11 minutes

Written: 2021-08-14 00:28 +0000

GSoC21 W10: LFortran Runtime Library Design

This post is part of the GSoC21: LFortran series.

The road to accurate elementary intrinsic functions is paved with IEEE/ISO standards

Background

Serialized update for the 2021 Google Summer of Code under the fortran-lang organization, mentored by Ondrej Certik.

Logistics

- Met with Ondrej daily from Sunday till Friday

- On Sunday we finished the compile time evaluation design (partially)

- Ondrej finished the rest overnight

- On Monday, we started co working on runtime implemenations

- Also added some more compile time stuff and pointers to clean things up

- Ondrej fixed a longstanding bug in the deserialise of serialized mod

- On Wednesday there was more scaffolding work done on the generics

- Added another implicit cast

- On Thursday we implemented an all fortran

sinfunction- Just as accurate as

gfortran - Compiles with LFortran

- Also fixed another serialization bug (floats were being truncated)

- Just as accurate as

- On Friday we redesigned the runtime library

- Also discussed future plans and longterm goals

- Opened discussions around the runtime library and elementary function accuracy on the Zulip instance

- Considered adding

fortranversions of LAMMPS (F77 and F90) to the compilation plans

- On Sunday we finished the compile time evaluation design (partially)

Overview

This was most definitely my most productive week by far.

New Merge Requests

- llvm: More values when possible (1113)

- Backend values from compile time evaluations

- kind: Compile time (1132)

- A rather thorny refactor; but one with great benefits

- asr: Clean up extract_kind (1136)

- Fruitful usage of the new functions

- Compile Time Intrinsic: Real (1114)

- A

castbased approach - selected_kinds: Body visitors (1167)

- Possibly my favorite MR, a nice pure

fortranimplementation backed by a roughsollyaapproximation - Initial sin implementation (1167)

- Possibly my favorite MR, a nice pure

fortranimplementation backed by a roughsollyaapproximation - sin: Rework runtime to get better approximations (1173)

- Possibly my favorite MR, a nice pure

fortranimplementation backed by a roughsollyaapproximation - Compile time floor (1176)

- Fairly standard implementation requiring casts

- Integer2real (1162)

- An implicit cast which had not previously been implement

- Compile time int (1169)

- Along the lines of

real() - Sincos tests (1194)

- Benchmarking and setting up of the new test harness

Freshly Assigned Issues

- Semantics: Implement correct handling of PARAMETER (510)

- ASR Pass: Order functions (507)

- Semantics: Implement FLOOR (A [, KIND]) (506)

- Semantics: Implement MODULO (A, [, KIND]) (504)

- [RFC] Compile Time Intrinsic Design (501)

- Strange Runtime issue with Implicit Casts (500)

- Implicit cast for parameter (499)

- Better handling of intrinsic module names (494)

Additional Tasks

Will write a closing report for next week.

Misc Logs

This was a really packed week. Much of the discussion has already taken place in the relevant issues and MRs; but some notes are in order for posterity.

Items to fix

- Some compile time function implementations are not standard compliatn

real

- Implicit casting rules fail for certain cases

- Notably for all

Arrays

- Notably for all

Strange bug when intrinsic functions are called on implicit cast

Generic Proceedures

This was fun to work on.

Fortran Runtime Intrinsics

A long term goal is to have a dog-fooded runtime library. We zeroed in on this design over on the wiki a few weeks ago. Now with generic proceedures in; we’ve begun implementing some of the intrinsic functions.

Simple Functions

The simplest ones are of course trivial:

1abs

Trignometric functions

For more “mathematical” methods, we needed a template. One we found

which was compatible with our license is the fdlibm versions. Consider

for sin:

Older compilers are much more readable compared to the newer ones.

Trignometric Functions

The quote is motivated by my (limited) reading of two texts in the field of implementing elementary functions, namely Muller (2016) and Muller et al. (2018).

Brainstorming Sin

- This and then the simpler version here

- Multiple interval based approximation used for AMD’s optimized sin

We were looking for a pedagogical, simple approach to calculating sin.

Unsurprisingly, the entire ecosystem of compilers seems to mostly follow

one implementation from the early 90s. Which is great, but Fortran

doesn’t really implement IEEE 754’s argument reduction routine (a.k.a.

quadmath). It turns out with a little massaging; the pedagogical

approach presented in this blog post by Christer

Ericson can be

adapted for use.

We needed to do slightly better. For starters, the errors of around \(10^{-16}\) could be stomached, but not the ones around \(10^{-10}\). Think of this as the same sort of problem as the standard fitting of a potential energy surface.

How do we test these? Import from the intrinsic, and rename to a different symbol, then run a loop.

Sollya and Function Approximations

Rather than go rooting around the annals of outdated compilers; and instead of boldly copying from existing libraries; an interesting approach is to calculate precise approximations including the Remez (or minmax), Taylor approximations, and Chebyshev approximations.

Thankfully testing this is trivial with Sollya. Since it does nothave a

terminal inbuild we need rlwrap too.

1nix-shell -p sollya rlwrap

Consider a trial implementation:

1! lfortran_intrinsic_sin.f90

2module lfortran_intrinsic_sin

3use, intrinsic :: iso_fortran_env, only: sp => real32, dp => real64

4implicit none

5

6interface sin

7 module procedure dsin

8end interface

9

10contains

11

12elemental real(dp) function dsin(x) result(r)

13real(dp), parameter :: pi = 3.1415926535897932384626433832795_dp

14real(dp), intent(in) :: x

15real(dp) :: y

16integer :: n

17if (abs(x) < pi/2) then

18 r = kernel_dsin(x)

19else ! fold to pi/2

20! https://realtimecollisiondetection.net/blog/?p=9

21 y = modulo(x, 2*pi)

22 y = min(y, pi - y)

23 y = max(y, -pi - y)

24 y = min(y, pi - y)

25 r = kernel_dsin(y)

26end if

27end function

28! Implement kernel_dsin with Sollya (read post)

The general approach to testing this is then:

1! test_sina.f90

2program main

3 use :: lfortran_intrinsic_sin, only: sin2 => dsin

4 real(kind=8) :: x,y

5 integer(kind=8) :: i

6 do i=1,1000000

7 y = real(i)/100.0

8 print*, y, abs(sin(y)-sin2(y))

9 end do

10end program

Which can be compiled with:

1gfortran -ffree-line-length-none lfortran_intrinsic_sin.f90 test_sina.f90 && ./a.out

Where the line length is important since we want to be able to copy and paste expressions from Sollya.

Remez

The test expressions can be generated as follows:

1nix-shell -p sollya rlwrap

2rlwrap -A sollya

3prec=120;

4f=sin(x);

5d=[-pi/2;pi/2];

6p=remez(f,16,d,default,1e-16,1e-30);

7p;

81.430896392112983438690712915149618875e-17 + x * (0.9999999999999989714281994951487325976 + x * (-3.349530544567829511297283045847078806e-16 + x * (-0.1666666666666470131512533841331726124 + x * (2.06038248351960358085265034956173799e-15 + x * (8.33333333322297677610158866083573121e-3 + x * (-5.200037492550081347598442689963074914e-15 + x * (-1.984126981335937217532222096272076263e-4 + x * (6.779661505197324337448766161086156236e-15 + x * (2.755731547177283303366066506722157076e-6 + x * (-4.994033469644991382509162854527493863e-15 + x * (-2.505182167856013032537240066679691216e-8 + x * (2.101039405474559290547827191709051192e-15 + x * (1.604654097883256924434495617086734883e-10 + x * (-4.71033700061518989435986419744537652e-16 + x * (-7.356804698944816248097669067062646244e-13 + x * 4.36551332303930597866240036869066221e-17)))))))))))))))

Where the signature of remez is

func, degree, interval, default=1, quality=1e-5, bounds=[0;infty]; Now

we can implement kernel_dsin as follows:

1elemental real(dp) function kernel_dsin(x) result(res)

2 real(dp), intent(in) :: x

3 res = 1.430896392112983438690712915149618875e-17_dp + x * (0.9999999999999989714281994951487325976_dp + x * (-3.349530544567829511297283045847078806e-16_dp + x * (-0.1666666666666470131512533841331726124_dp + x * (2.06038248351960358085265034956173799e-15_dp + x * (8.33333333322297677610158866083573121e-3_dp + x * (-5.200037492550081347598442689963074914e-15_dp + x * (-1.984126981335937217532222096272076263e-4_dp + x * (6.779661505197324337448766161086156236e-15_dp + x * (2.755731547177283303366066506722157076e-6_dp + x * (-4.994033469644991382509162854527493863e-15_dp + x * (-2.505182167856013032537240066679691216e-8_dp + x * (2.101039405474559290547827191709051192e-15_dp + x * (1.604654097883256924434495617086734883e-10_dp + x * (-4.71033700061518989435986419744537652e-16_dp + x * (-7.356804698944816248097669067062646244e-13_dp + x * 4.36551332303930597866240036869066221e-17_dp)))))))))))))))

4

5end function

Note that we need to use double precision for the numbers, so we can

replace SPACE+ with _dpSPACE+. We would like to also get some

statistics of the output, in bash just becuse we can 1.

1gfortran -ffree-line-length-none lfortran_intrinsic_sin.f90 test_sina.f90 && ./a.out > remez16

2awk 'NR == 1 || $2 < min {line = $0; min = $2}END{print line}' remez16

3awk 'NR == 1 || $2 > max {line = $0; max = $2}END{print line}' remez16

Taylor and Chebyshev

To extract information from the “certified” functions in Sollaya we

need to remember that the return value is a list.

1nix-shell -p sollya rlwrap

2rlwrap -A sollya

3prec=120;

4f=sin(x);

5d=[-pi/2;pi/2];

6p=chebyshevform(f,16,d);

7p[2];

8p[0];

9quit;

Similarly taylorform can be used to get Taylor series expansions.

Given that we know exactly what we want to generate; we can also simply

use sollya with an input script:

1sollya < taylorform16

Where taylorform16 contains:

1f=sin(x);

2d=[-pi/2;pi/2];

3p=taylorform(f,16,d);

4p[0];

This workflow can be easily extended into a script based runner.

Better Polynomials

Some basic understanding of series expansions and / or visual inspection

would make it clear that for sin at-least; the odd terms are

essentially \(0\). Sollya can handle these; as described in Muller

(2016). We opted to go the

rounding error route.

Rounding Errors

With Ondrej, we were able to isolate the inaccuracy in our pure

fortran implementation and we realized it stemmed from the manner in

which our argument reduction is carried out. This is a well known

problem with attempted solutions from 1980s Payne and Hanek

(1983) (including a

related implementation in

BSD)2.

In particular, as per this WG document of the SunPro

group

Ng (2006) describing the cannonical

implementation; the error is actually from the division and difference.

This is also touched upon elsewhere in the literature, Brisebarre et al.

(2005) and Defour et

al. (n.d.), along with Muller et al.

(2018) . Naturally one

of first places to check is the relevant ISO draft standard on

elementary

functions

(N462Pdf?) .

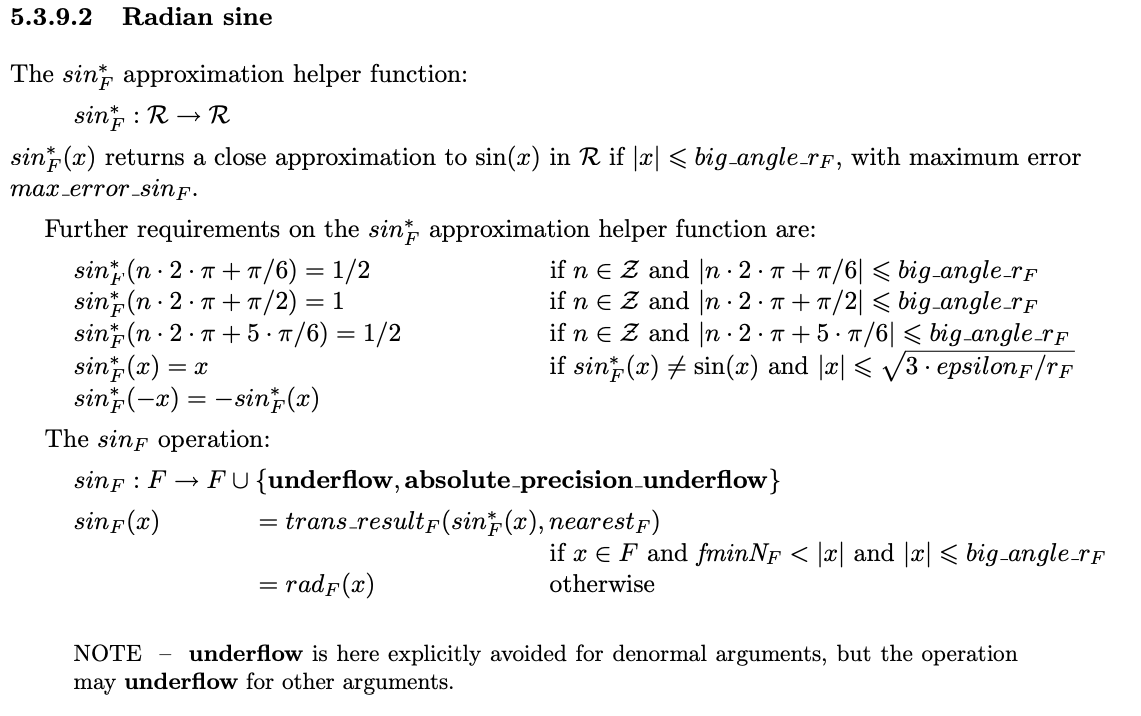

Figure 1: The ISO/IEC FCD 10967-2.4:1999(E) suggestion for sin

This isn’t immediately actionable; but might be something for later. Naturally the discussions here in context of IEEE 754 (IEEEStd754?) (which the Fortran standard does not fully support) and presumes basic knowledge of floating point numbers Goldberg (1991) . The clearest explanation for requiring higher precision for some constants in these reduction algorithms is given nicely by the draft standard:

For reduction of an argument given in radians, implementations use one or several approximate value(s) of π (or of a multiple of π), valid to, say, n digits. The division implied in the argument reduction cannot be valid to more than n digits, which implies a maximum absolute angle value for which the reduction yields an accurate reduced angle value.

Benchmarking and Testing

To make the benchmarking of new lfortran runtime intrinsic functions

as painless as the existing test runner framework; I ended up

implementing a new framework for these. Currently this takes different

compiler backends and a TOML file along with some Jinja2 templates and

makes a CMake based project which is built and gives out a grid of

points along with the gfortran values and abs differences. Some

things to do there:

- Plot things automatically

- Use non-uniform grids

- Other QoL changes

This is really easy to work with:

1cd src/runtime/pure/benchmarks

2nix-shell -p 'python38.withPackages(ps: with ps; [seaborn matplotlib click jinja2 tomli pandas])'

3# Or just micromamba install seaborn matplotlib pandas click jinja2 tomli

4python gen_test.py --execute --build --compiler "gfortran"

The TOML file has simple naming rules:

1[[trig]] # [name of lfortran_MODULE.f90]

2fname = "sin" # Function to generate test for; must be the same as for gfortran

3only = "dsin" # if not testing same function

4range = 20 # [-range, +range]

5stepsize = 0.01

As does the template:

1program {{ fname }}_test

2 use, intrinsic :: iso_fortran_env, only: sp => real32, dp => real64

3 use :: lfortran_intrinsic_trig, only: {{ fname }}2 => {{ fname }}

4 real(dp), parameter :: pi = 3.1415926535897932384626433832795_dp

5 real(dp) :: ir, gf, lf

6 print*, "Compiled with {{ compiler }}"

7 print*, "x ", "gfortran_{{fname}}(x) ", &

8 "lfortran_{{fname}}(x) ", "abs_error "

9 ir = -{{ range }}

10 do while (ir<={{ range }})

11 gf = {{ fname }}(ir)

12 lf = {{ fname }}2(ir)

13 print*, ir, gf, lf, abs(gf-lf)

14 ir = ir + {{ stepsize }}

15 end do

16end program

Conclusions

Rather than move forward with the closing comments; and going into more

detail about the manner in which further sollya based polynomial

approximations are being looked into; I will end this post here; as a

trailer and invitation to the closing report. This has been the last of

the weekly updates. This doesn’t mean anything will change other than

the format. I quite like keeping track of the work I do in this manner;

keeps me honest and not complacent 3. For those of you following

along; this has been a fun ride; for those who come to this in the

future; feel free to follow along from the beginning and drop comments

below.

References

Brisebarre, Nicolas, David Defour, Peter Kornerup, and Jean-Michel Muller. 2005. “A New Range-Reduction Algorithm.” IEEE TRANSACTIONS ON COMPUTERS 54 (3): 9.

Defour, P David, Peter Kornerup, Jean-Michel Muller, and Nathalie Revol. n.d. “A New Range Reduction Algorithm,” 13.

Goldberg, David. 1991. “What Every Computer Scientist Should Know about Floating-Point Arithmetic.” ACM Computing Surveys 23 (1): 5–48. https://doi.org/10.1145/103162.103163.

Muller, Jean-Michel. 2016. Elementary Functions. Boston, MA: Birkhäuser Boston. https://doi.org/10.1007/978-1-4899-7983-4.

Muller, Jean-Michel, Nicolas Brunie, Florent de Dinechin, Claude-Pierre Jeannerod, Mioara Joldes, Vincent Lefèvre, Guillaume Melquiond, Nathalie Revol, and Serge Torres. 2018. Handbook of Floating-Point Arithmetic. Cham: Springer International Publishing. https://doi.org/10.1007/978-3-319-76526-6.

Ng, K C. 2006. “Argument Reduction for Huge Arguments: Good to the Last Bit.” Introduction, 8.

Payne, Mary H., and Robert N. Hanek. 1983. “Radian Reduction for Trigonometric Functions.” ACM SIGNUM Newsletter 18 (1): 19–24. https://doi.org/10.1145/1057600.1057602.

From this stackoverflow post of course ↩︎

From this blog post by Bert Hubert ↩︎

I maintain similar notes for my research, but those sadly never see the light of day ↩︎

Series info

GSoC21: LFortran series

- GSoC21 W1: LFortran Kickoff

- GSoC21 W2: LFortran Unraveling

- GSoC21 W3: Kind, Characters, and Standards

- GSoC21 W4: LFortran, Backends and Bugs

- GSoC21 W5: LFortran Design Details and minidftatom

- GSoC21 W6: LFortran ASR and Values

- GSoC21 W7: LFortran Workflow Basics

- GSoC21 W8: LFortran Refactors, and Compile Time Evaluations

- GSoC21 W9: LFortran Bug Hunting Bonanza

- GSoC21 W10: LFortran Runtime Library Design <-- You are here!

- GSoC21 LFortran and Computational Chemistry